Increase in CSAT

30%

Decrease in agent ramp time

120%

Increase in monthly coaching sessions

300%

Applying a growth mindset in QA can lead to continual improvements in customer experience and CSAT.

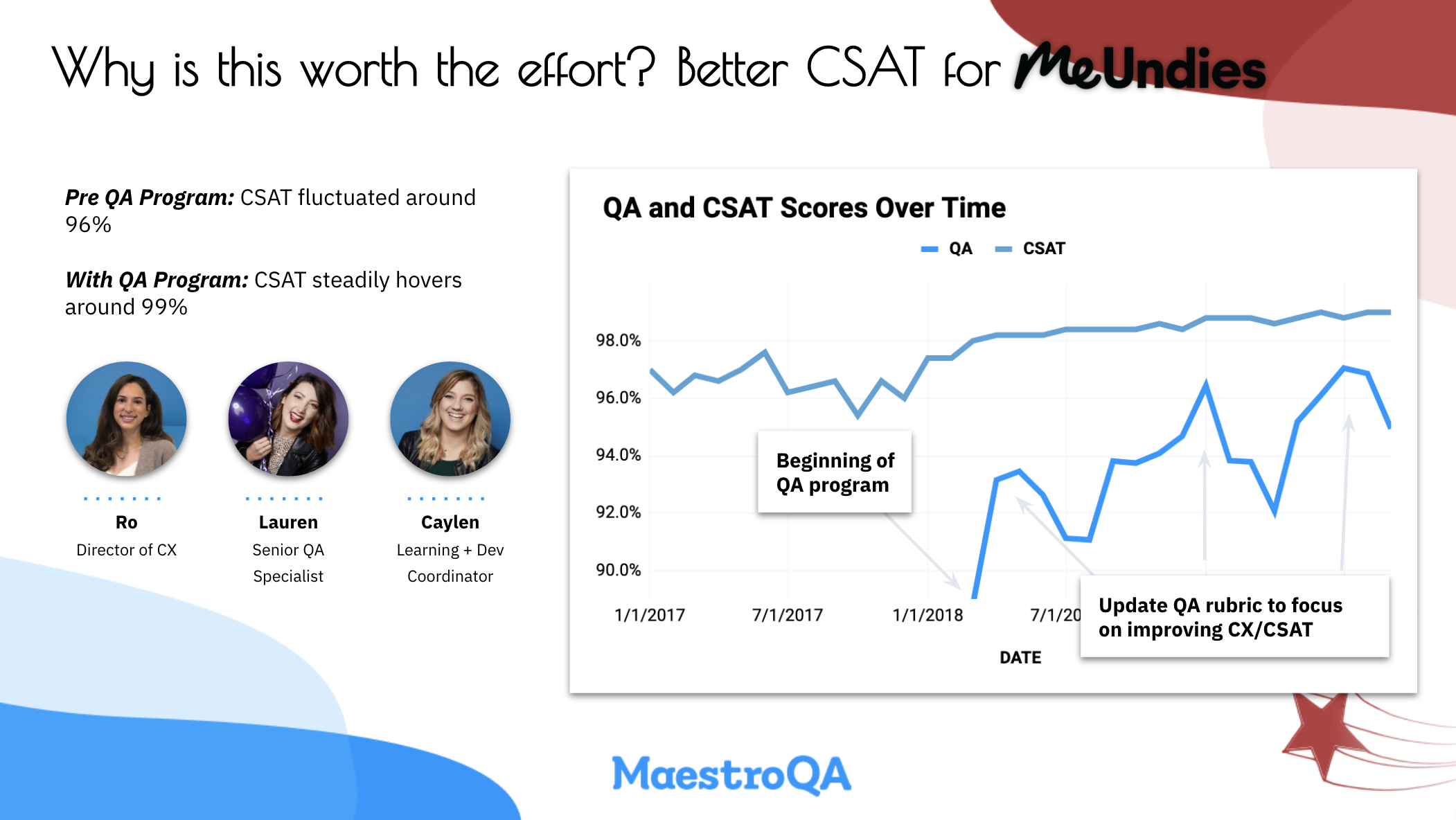

MeUndies, a direct-to-consumer underwear and apparel company, has seen this first-hand, as they’ve updated scorecards and raised standards for both their HQ team and Philippines team (~75 agents) multiple times in the past few years.

When MeUndies started their QA program, their CSAT was ~96%. Their first QA scorecard led to meaningful improvements in CSAT scores. They were continually reinforcing interactions against a standard of quality, and it worked!

But after a while, CSAT plateaued (and so did their QA score). In other words, their agents had “mastered” that QA scorecard, and their scorecard was no longer leading to additional improvements in customers’ experiences – it was maintaining a level of CX that MeUndies knew could be better.

As you’d expect out of a team so focused on growing, they reassessed what their quality standards were, and updated them to better reflect the type of support they knew customers would love.

After two years, their CSAT has improved to 99%, largely due to their mindset about QA as the vehicle to improve customers’ experiences.

Here’s how they think about changing their scorecard, and the impact that their quality program has had on CSAT.

Challenge: When QA score is no longer moving the needle on CSAT

The thing that makes MeUndies different from other programs we have seen is that we are truly never happy with where we are at.

Just because their CSAT isn’t bad doesn’t mean there aren’t areas for improvement. Until every interaction is a positive CSAT interaction, there is work to be done!

In their last big revisit, they (Ro, Lauren, Caylen👆) took a handful of tickets with a “100%” QA score, and they dove in to see, “Is this ticket really deserving of 100%?” To answer that, each wrote out what their own version of a “perfect” response would be.

They identified the common threads between their responses and nailed down the major characteristics that they agreed were indicative of quality. They then looked back at the original tickets and re-graded them from this new perspective. The score dropped from 100% to about 65%.

Solution #1: Update the QA scorecard to focus on current weaknesses

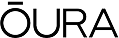

A great example of a change we made is around Brand Voice.

Brand voice is a huge part of how we interact with customers. Not only do we have to teach something that is not super tangible, but we have to teach agents who live in a different country how to grasp a very “California” way of communicating.

Originally, we had a broad statement on our scorecard like, “Portrayed the MeUndies brand voice in interaction,” and it was worth a lot of points. But because it was so broad, there was a lot of room for different interpretations and questions, and it was hard for agents to know what to do.

Agents did not have a great grasp of brand voice, and our scorecard wasn’t helping. We had to find a way to take brand voice (something that tends to be intangible) and make it more tangible. We defined four major characteristics of our brand voice based on a house system (yes, like Harry Potter)! We had agents take a personality quiz to find their dominant house, and provided pop culture references for each house. This gave agents a tangible grasp of the characteristics we are looking for.

Then, we tied them into how they should be reflected in our customer interactions. Each characteristic appears in our scorecard as non-graded feedback (checkboxes) that constantly reminds agents which characteristics they are using best and which ones require more focus, without impacting their overall grade.
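To make the idea of non-graded checkboxes concrete, here is a minimal sketch of a scorecard where only graded questions count toward the score. It is an illustration, not MeUndies' actual scorecard: the class names, the question text, and the `house_a` to `house_d` labels are hypothetical placeholders, since the four characteristics are not named here.

```python
from dataclasses import dataclass, field


@dataclass
class GradedItem:
    question: str
    points_possible: int
    points_earned: int


@dataclass
class Scorecard:
    graded: list[GradedItem]
    # Non-graded brand voice checkboxes: characteristic -> was it observed?
    # The keys are placeholders; the real characteristic names are not given.
    brand_voice_checks: dict[str, bool] = field(default_factory=dict)

    def score(self) -> float:
        """Percentage score computed from graded items only."""
        possible = sum(item.points_possible for item in self.graded)
        earned = sum(item.points_earned for item in self.graded)
        return 100.0 * earned / possible if possible else 0.0

    def needs_focus(self) -> list[str]:
        """Characteristics the reviewer did not check off."""
        return [name for name, seen in self.brand_voice_checks.items() if not seen]


card = Scorecard(
    graded=[
        GradedItem("Resolved the customer's issue", 10, 8),
        GradedItem("Followed the correct process", 10, 10),
    ],
    brand_voice_checks={
        "house_a": True,
        "house_b": False,
        "house_c": True,
        "house_d": False,
    },
)
```

With this layout, `card.needs_focus()` tells the agent where to improve, while `card.score()` stays the same no matter which boxes are checked.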

Now agents can see in their scorecard which pieces they need to work on, and why. It was about both providing the correct resources and training, and making sure the rubric reinforces that to make the team successful.

Solution #2: Make scorecard changes actionable through training and QA

In terms of the feedback loop, it starts with Lauren and her QA team. They go through hundreds of agent and customer interactions every week, then provide the L&D team with a monthly report on learning and development opportunities. Primarily they report on the most commonly missed questions.
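As a rough illustration of how a "most commonly missed questions" report could be tallied, the sketch below counts missed scorecard questions across a batch of reviews. The data shape (a list of reviews, each with a `missed` list) and the question names are assumptions made for the example, not a description of the tooling MeUndies uses.

```python
from collections import Counter


def most_missed(reviews, top_n=3):
    """Return the top_n most frequently missed questions with their counts."""
    counts = Counter(
        question for review in reviews for question in review["missed"]
    )
    return counts.most_common(top_n)


# Hypothetical sample of one month's reviews.
sample_reviews = [
    {"agent": "A", "missed": ["brand_voice", "accurate_resolution"]},
    {"agent": "B", "missed": ["brand_voice"]},
    {"agent": "C", "missed": ["brand_voice", "accurate_resolution", "greeting"]},
    {"agent": "D", "missed": []},
]

report = most_missed(sample_reviews, top_n=2)
```

The resulting list is the kind of input an L&D team could use to decide which lessons to build first.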

Caylen will then take those opportunities, build actionable lessons in our L&D platform, CheekSquad University, and distribute them to the team.

Getting Agent Buy-In

I think the majority of support professionals can identify with one of our primary struggles: getting your entire team to embrace a Quality Assurance program and be “OK” with changes. To be perfectly honest, QA is generally not considered a popular program within any support team. If that’s not the case with your team, err... let’s talk! But the truth is, our agents aren’t always 100% on board with the changes that are made.

Generally speaking, many agents view our QA specialists as being overly critical, nit-picky and merciless. This is especially true when we make a change to one of our scorecards. What our agents tend to disregard is the purpose of our QA team and program, which is to help our agents transform into agent superstars.

At the base level, one thing we do is incentivize based on averages – if the average QA scores across the board are lower than they were last month, there is still the opportunity to achieve your normal bonus based on where you fall relative to the mean. This helps the agents buy in at the base level.
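The exact bonus rule is not spelled out here, so the following is only a sketch of the general idea: judging each agent against the team mean rather than a fixed target. It assumes, purely for illustration, that an agent qualifies when their score is at or above the mean; the function name and the sample scores are hypothetical.

```python
from statistics import mean


def bonus_eligible(scores):
    """Map each agent to True if their QA score is at or above the team mean.

    Assumption: "at or above the mean" is an illustrative threshold only.
    """
    team_mean = mean(scores.values())
    return {agent: score >= team_mean for agent, score in scores.items()}


# Hypothetical scores before and after a stricter scorecard is introduced.
last_month = {"agent_1": 95, "agent_2": 90, "agent_3": 85}
this_month = {"agent_1": 75, "agent_2": 70, "agent_3": 65}

eligible_before = bonus_eligible(last_month)
eligible_after = bonus_eligible(this_month)
```

Because every score in the example drops by the same amount, eligibility is identical in both months, which is the point of grading relative to the mean: a tougher scorecard does not by itself cost anyone their bonus.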

But as a leadership team, we are okay with taking a hit in our QA metrics, because we have seen that it leads to better work from our agents. And no matter what QA change we have made, the scores always bump back up to where they were before. Regardless of whether it is a scorecard change or some sort of operational challenge that impacts our interactions, our high standards make it so we bounce back faster. And even more importantly, we are able to see who truly embraces the changes. Who is willing to admit they aren’t perfect, accept feedback, and push themselves to be better? These are the agents who end up being promoted.

Impact: Agent buy-in to growth mindset around QA, continuous CSAT improvement

What really gets us through the tough times are the moments when it “clicks” with the team. The best example I can give is when an agent who is consistently struggling with their CSAT scores not only embraces the feedback they receive from their QA specialist, but also seeks out 1:1 time with the QA team. When they take the feedback and turn it into action, they almost always see a positive change in CSAT scores. That’s when we start to see agent “buy-in” with our QA program.

Through our QA program, we have really been able to set new standards for ourselves. And it has played a huge role in our CSAT. Not just in the actual metrics, but in the ideology behind it. Everyone on the team knows the level we are striving for, and works hard to meet it. That’s probably the biggest change we have seen over the recent months. We have seen the agents and our TLs really embrace the growth mindset, pushing themselves to always strive for improvement, both on an individual level and as a team. It’s a true sign that our obsession with growth has fully become part of the CheekSquad culture and DNA.